Who decides when AI acts on your behalf?

We're still adjusting to a post-AI world, and with that comes a shift from a screen-based internet to something more ambient. It will spread across your devices, be projected into your physical and digital environments, and be sensed biometrically through your body. You won't need to click, type, or talk as much, because these new ecosystems will interpret what you want from context, patterns, and signals you're not consciously sending.

That part is already being developed around the world. What isn't solved yet is who gets paid, who decides the rules, and who is accountable when software starts acting on your behalf.

From Commands to Intent

Most software today waits for instructions, such as typed search terms, button clicks, and scrolling through menus. The next generation works differently: it infers what you want instead of waiting to be told.

We all have personal accounts; you might be reading this through one right now. But the idea of an account is expanding to include you plus a personalized AI agent that interprets your intent from:

Context: time, place, devices nearby

Behavior: what you usually want in this state

Signals: pauses, focus, movement, biometrics

You can still choose content, media, and services directly, but increasingly they will be generated, assembled, or modified specifically for you in the moment.

The Quiet Negotiation You Don't See

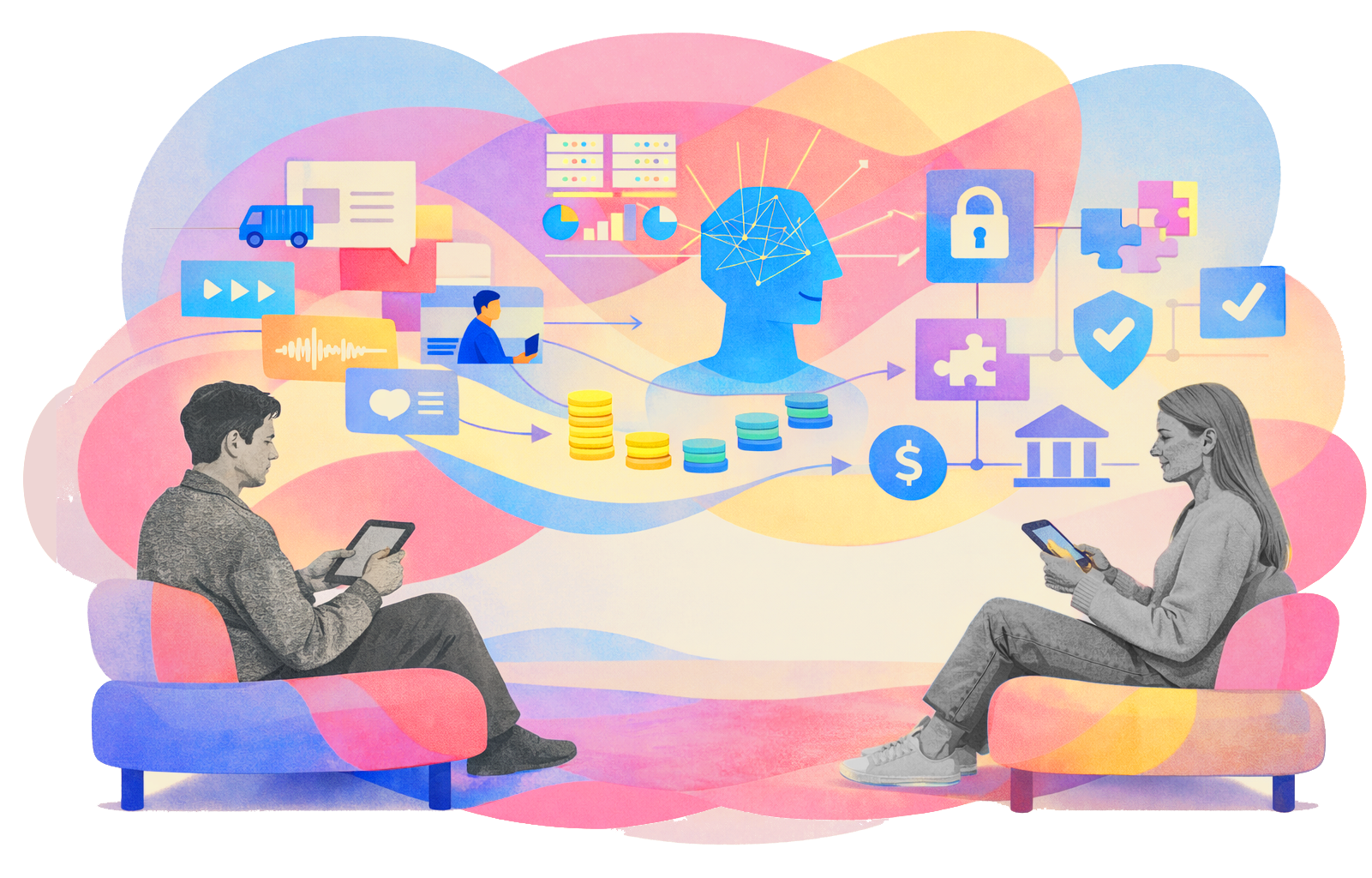

Creating those experiences involves a negotiation between three parties:

Your AI agent, acting in your interest

Creator systems, representing work that's being used, including checking attribution/lineage paths if content was remixed from multiple sources

Platforms, coordinating delivery and monetization

That negotiation is already happening when we use AI today, but it's just not being handled well. It's new and unregulated, so the latest AI platforms do whatever they can get away with, which is a lot.

As a result, creators aren't receiving fair credit or compensation. One recent study found that when AI systems assemble content from multiple sources, the original creators receive no compensation 95% of the time, even when their work is clearly identifiable in the output. This isn't the user's fault; it's the fault of AI systems that don't give users enough control over the process.

What Should Exist

If agent-mediated experiences are going to scale without breaking trust, three things can't be optional:

1. Privacy by Architecture

Your agent has to protect what it reveals about you, even when more data might improve the experience. Some contextual info is helpful ("user23945823 prefers calm content in the evening"), but too much ("heart rate elevated, user1394134 just saw a hot pic") is counterproductive and unnecessarily feeds the surveillance economy.

Your agent should be able to bring you relevant content without exposing or using too much of your personal data.
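As a rough sketch of what "privacy by architecture" could look like, the Python below assumes a hypothetical on-device context record and a fixed disclosure allowlist. The agent shares a fresh pseudonym, a coarse time-of-day bucket, and a mood preference the user has chosen to share, while the account ID, raw heart rate, and viewing history never leave the device. The field names, time buckets, and `disclose` function are illustrative assumptions, not an existing API.

```python
import secrets
from dataclasses import dataclass


@dataclass
class RawContext:
    """Everything the agent can sense on-device (hypothetical fields)."""
    user_id: str
    local_hour: int          # 0-23
    heart_rate_bpm: int      # raw biometric, never disclosed
    recent_views: list[str]  # viewing history, never disclosed
    preferred_mood: str      # a preference the user has chosen to share


def time_bucket(hour: int) -> str:
    """Coarsen an exact hour into a broad bucket."""
    if 6 <= hour < 18:
        return "day"
    if 18 <= hour < 23:
        return "evening"
    return "night"


def disclose(ctx: RawContext) -> dict[str, str]:
    """Build the minimal payload the agent sends to a platform or creator system.

    Only allowlisted, coarse-grained fields are included; the real user_id,
    heart rate, and viewing history are deliberately left out.
    """
    return {
        # Fresh random pseudonym per disclosure instead of the account ID.
        "requester": secrets.token_hex(8),
        "time_of_day": time_bucket(ctx.local_hour),
        "mood_preference": ctx.preferred_mood,
    }
```

A payload like this is enough for a platform to serve "calm content in the evening" without ever seeing the signals behind that preference.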

2. Automatic Attribution and Payment

If content is used, whether in its original, remixed, or reassembled form, contributors should get paid by default, not by exception. And they shouldn't have to wait on a net-30 invoice.

This means tracking usage through remix chains: if a video essay uses clips from five sources, all six parties (the essay creator plus the five source creators) should get fractional payments automatically. The basic infrastructure for this exists today in the fintech and ad tech worlds, where micropayments can settle transactions in under 200 milliseconds.
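The remix-chain payout can be sketched as a walk over a tree of works. This Python assumes each work carries a hypothetical weight for its own creator plus one weight per source it draws on, and that amounts are integer micro-units. A largest-remainder split keeps every payout whole and guarantees the parts add up exactly to the amount paid. How the weights would actually be set (clip length, licensing terms, negotiation) is an open design question the sketch doesn't answer, and the `Work`, `split`, and `settle` names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Work:
    creator: str
    own_weight: int  # hypothetical share kept by this work's own creator
    sources: List["Work"] = field(default_factory=list)
    source_weights: List[int] = field(default_factory=list)  # one weight per source


def split(total: int, weights: List[int]) -> List[int]:
    """Divide an integer amount in proportion to weights; parts always sum to total."""
    denom = sum(weights)
    parts = [total * w // denom for w in weights]
    remainders = [total * w % denom for w in weights]
    leftover = total - sum(parts)
    # Hand the few leftover units to the largest remainders (stable sort breaks ties by order).
    for i in sorted(range(len(weights)), key=lambda j: -remainders[j])[:leftover]:
        parts[i] += 1
    return parts


def settle(work: Work, amount: int, payouts: Optional[Dict[str, int]] = None) -> Dict[str, int]:
    """Recursively credit every contributor in a remix chain."""
    if payouts is None:
        payouts = {}
    parts = split(amount, [work.own_weight, *work.source_weights])
    payouts[work.creator] = payouts.get(work.creator, 0) + parts[0]
    for source, share in zip(work.sources, parts[1:]):
        settle(source, share, payouts)  # a source may itself be a remix
    return payouts


# The video-essay example: one essay built on clips from five sources.
clips = [Work(creator=f"source_{n}", own_weight=1) for n in range(1, 6)]
essay = Work(creator="essay_creator", own_weight=50, sources=clips, source_weights=[10] * 5)
print(settle(essay, 1_000_000))
# essay_creator gets 500000; each of the five sources gets 100000
```

Because `settle` recurses, a source that is itself a remix passes part of its share upstream automatically, which is what "paid by default" through a remix chain would require.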

3. Compliance and Reg-Tech

This is not fun to talk about at parties, but having rules that AI agents can't violate is pretty important. Rules can vary by individual or region, but there should be a clear core set that is enforced, not merely suggested.

Here's an example: your AI agent can use biometric and contextual signals to improve an experience, but it shouldn't be allowed to secretly trade those signals away for preferential pricing or access unless you're cool with it. Creator agents can't be tricked into accepting less than their minimum rate. Platform agents can't serve content that violates regional compliance rules.

These constraints need to be encoded in the negotiation process itself, and we should be able to audit the resulting transactions regularly.
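One way to make those rules hard constraints rather than soft suggestions is to check every proposed deal against them before it can be accepted, and to record each decision in an append-only log. The Python below is a minimal sketch under assumed, hypothetical names (`Offer`, `Constraints`, `negotiate`, `AuditLog`). It enforces the three example rules (a creator's minimum rate, user consent before biometric signals are traded, and a regional block list) and chains each log entry to the previous one with SHA-256, so later edits to the history are detectable during an audit.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Offer:
    content_id: str
    creator_id: str
    price_micros: int
    region: str
    shares_biometrics: bool  # would this deal trade the user's biometric signals?


@dataclass
class Constraints:
    creator_min_rate: dict[str, int]        # creator_id -> minimum price in micro-units
    user_consents_to_biometric_trade: bool
    blocked_in_region: dict[str, set[str]]  # region -> content_ids not allowed there


def violations(offer: Offer, c: Constraints) -> list[str]:
    """Return every hard rule the offer breaks (empty list means compliant)."""
    problems = []
    if offer.price_micros < c.creator_min_rate.get(offer.creator_id, 0):
        problems.append("below creator minimum rate")
    if offer.shares_biometrics and not c.user_consents_to_biometric_trade:
        problems.append("biometric trade without user consent")
    if offer.content_id in c.blocked_in_region.get(offer.region, set()):
        problems.append("content not permitted in region")
    return problems


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    @staticmethod
    def _digest(prev: str, record: dict) -> str:
        body = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev + body).encode()).hexdigest()

    def append(self, record: dict) -> None:
        # Each entry commits to the previous entry's hash (a simple hash chain).
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        self.entries.append({"record": record, "prev": prev, "hash": self._digest(prev, record)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = "0" * 64
        for e in self.entries:
            if e["prev"] != prev or self._digest(prev, e["record"]) != e["hash"]:
                return False
            prev = e["hash"]
        return True


def negotiate(offer: Offer, c: Constraints, log: AuditLog) -> bool:
    """Accept only compliant offers, and log every decision either way."""
    problems = violations(offer, c)
    log.append({
        "content_id": offer.content_id,
        "price_micros": offer.price_micros,
        "accepted": not problems,
        "violations": problems,
    })
    return not problems
```

The design choice here is that rejection reasons are logged alongside acceptances, so an auditor can see not just which deals went through but which rules were doing the work.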

We've Seen This Movie Before

When music moved from CDs to MP3s, distribution scaled faster than payment infrastructure. The result was chaos, followed by streaming services that finally made licensing and royalties work at scale.

AI agents assembling media from your accounts and from across the internet will follow the same pattern, except much faster. The difference this time is that we can see the gap before it breaks things.

The foundations already exist: real-time payments between systems, automatic licensing when content is used, and global settlement that doesn't rely on slow legacy rails. What's missing is the connective tissue that coordinates these existing and new systems.

What Happens Next

Agent-driven experiences are becoming the next platform layer. Will they concentrate power by default, or operate inside systems with built-in payment, consent, and accountability?

If we build the right infrastructure, AI becomes a multiplier. If we don't, a handful of platforms will set the rules, capture the value, and clean up the mess later.